- Implemented a Kalman filter for 1D motion that predicts position and velocity from measurements.

- Extended this by implementing an Extended Kalman Filter (EKF) for 2D motion. The entire project was coded in C++.

- Set up and initialized the state transition matrix, the measurement matrix, and the covariance matrices.

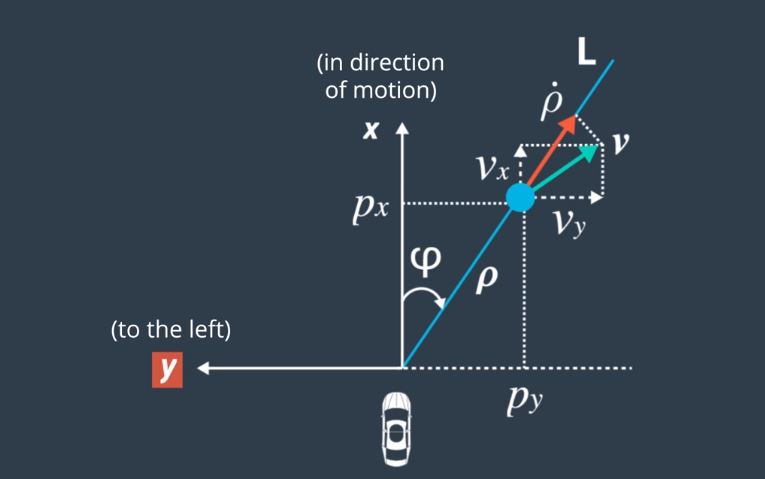

- Radar and lidar were the main sensors used in this project. The image below shows the coordinate system used for the project.

- Set up the Kalman filter equations for lidar and radar measurements. Because radar measurements are a nonlinear function of the state, I used the Extended Kalman Filter approach to linearize them by computing partial derivatives and the Jacobian matrix.

- Computed the partial derivatives and Jacobian matrices, and worked through the flow from raw radar and lidar data to the final estimates of the x and y positions and the Vx and Vy velocities.
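The radar linearization can be sketched as follows. This assumes the state is `[px, py, vx, vy]` and that each radar measurement consists of range, bearing, and range rate, which is a common setup but is not spelled out above. The function names `radarMeasurement` and `radarJacobian` and the small-range guard are illustrative choices, not the project's actual code.

```cpp
#include <array>
#include <cmath>

using State = std::array<double, 4>;                    // [px, py, vx, vy]
using RadarMeas = std::array<double, 3>;                // [rho, phi, rho_dot]
using Jacobian = std::array<std::array<double, 4>, 3>;  // 3x4 matrix

// Nonlinear measurement function h(x): Cartesian state to polar radar space.
RadarMeas radarMeasurement(const State& s) {
    const double rho = std::sqrt(s[0] * s[0] + s[1] * s[1]);
    const double phi = std::atan2(s[1], s[0]);
    // Guard against division by zero when the target is at the origin.
    const double safeRho = (rho < 1e-6) ? 1e-6 : rho;
    const double rhoDot = (s[0] * s[2] + s[1] * s[3]) / safeRho;
    return {rho, phi, rhoDot};
}

// Jacobian of h(x) with respect to the state, evaluated at s.
// Returns false (leaving Hj untouched) when the range is too small
// for the partial derivatives to be well defined.
bool radarJacobian(const State& s, Jacobian& Hj) {
    const double px = s[0], py = s[1], vx = s[2], vy = s[3];
    const double c1 = px * px + py * py;  // rho^2
    if (c1 < 1e-9) {
        return false;
    }
    const double c2 = std::sqrt(c1);  // rho
    const double c3 = c1 * c2;        // rho^3

    Hj[0] = {px / c2, py / c2, 0.0, 0.0};
    Hj[1] = {-py / c1, px / c1, 0.0, 0.0};
    Hj[2] = {py * (vx * py - vy * px) / c3,
             px * (vy * px - vx * py) / c3,
             px / c2, py / c2};
    return true;
}
```

In an EKF update, `radarMeasurement` would supply the predicted measurement for the residual, while the matrix from `radarJacobian` would stand in for the measurement matrix in the gain and covariance equations. Lidar measurements are linear in position, so they can use the standard Kalman update without a Jacobian.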

- Lidar measurements are red circles, radar measurements are blue circles with an arrow pointing in the direction of the observed angle, and estimation markers are green triangles.

- Ground truth data was also provided for this project. I used the root mean square error (RMSE) as the metric for how closely the filter's estimates match the ground truth.
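A minimal sketch of the RMSE computation, assuming each estimate and ground-truth sample is a 4-element state `[px, py, vx, vy]` and that the error is reported per component. The function name `rmse` and the choice to throw on mismatched input are illustrative assumptions.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

using State = std::array<double, 4>;  // [px, py, vx, vy]

// Per-component root mean square error between estimates and ground truth.
State rmse(const std::vector<State>& estimates,
           const std::vector<State>& groundTruth) {
    if (estimates.empty() || estimates.size() != groundTruth.size()) {
        throw std::invalid_argument("inputs must be non-empty and equal in size");
    }
    State result{};  // zero-initialized accumulator of squared errors
    for (std::size_t i = 0; i < estimates.size(); ++i) {
        for (std::size_t k = 0; k < result.size(); ++k) {
            const double diff = estimates[i][k] - groundTruth[i][k];
            result[k] += diff * diff;
        }
    }
    for (double& value : result) {
        // Mean of the squared errors, then the square root.
        value = std::sqrt(value / static_cast<double>(estimates.size()));
    }
    return result;
}
```

Reporting the error per component makes it easy to see whether the position or the velocity estimates are drifting from the ground truth.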

- Unscented Kalman Filters generally handle nonlinear motion better than the EKF, so implementing one is the next step for this project.

- Currently implementing sensor fusion algorithms with camera and radar data at Dura Automotive Systems, Michigan.

- Kindly reach out for further details.